Examining Technical Processes for GCP

In your role as an architect, you’ll be involved in numerous technical procedures, some of which were covered in earlier chapters, like continuous integration/continuous delivery (CI/CD) and post-mortem analysis. This chapter will delve deeper into these and other processes like software development lifecycle management, testing and validation, and business continuity and disaster recovery planning. Our aim is to present a comprehensive understanding of these technical processes, with an emphasis on their connection to business goals. For a more technical exploration, including the use of tools like Jenkins in CI/CD, please refer to the subsequent discussions.

Software Development Lifecycle Plan

Section titled “Software Development Lifecycle Plan”- Analysis

- Design

- Development

- Testing

- Deployment

- Documentation

- Maintenance

This is called a cycle because at the end of the process, we iterate over again from our business and technical needs.

Analysis and Examination

Section titled “Analysis and Examination”The analysis and requirements gathering phase is critical for a Google Cloud project to ensure the solution meets business needs. Key analysis activities include:

Evaluating the Business Problem - Work with stakeholders to fully understand the issues or opportunities being addressed. Drill into the pain points experienced by users and customers. Quantify the impacts on revenue, productivity, and other metrics. This foundational understanding guides the entire project.

Assessing Solution Options - With the problem scope clarified, brainstorm potential technical and process solutions. Leverage Google Cloud technologies like BigQuery, App Engine, and AI Platform for options. Estimate level of effort, costs, and benefits for each option.

Analyzing Requirements - Gather detailed requirements through sessions with stakeholders. Document user stories, edge cases, interfaces, reporting needs, and more. Prioritize must-have versus nice-to-have capabilities. Define MVP vs. longer term functionality.

Clarifying Technical Constraints - Determine limitations imposed by data sources, legacy systems, regulations, and other factors. Identify potential blockers and dependencies.

Defining the Solution Scope - Synthesize the research into high-level solutions, priorities, delivery timelines, and measures of success. Build consensus among stakeholders on what will be delivered.

Careful analysis and requirements gathering reduces risk by aligning project plans with business needs. The deliverables enable constructive discussions on tradeoffs and set clear expectations before committing to a solution.

Problem Scoping

Section titled “Problem Scoping”Problem scoping involves clearly defining the issues or opportunities to be addressed by the project. This requires understanding the current state and desired future state from the user’s perspective. Effective scoping frames the problem statement and bounds the scope to reasonable parameters. It identifies relevant systems, stakeholders, processes, and objectives. Well-defined problem scoping sets the foundation for the solution requirements and design. It focuses efforts on the core issues rather than trying to boil the ocean. The analysis should yield a narrowly targeted problem statement that the project aims to resolve for a specific set of users.

Domain knowledge from teams with direct experience is critical for accurate problem scoping. For example, having customer support agents who regularly interface with users participate in requirements gathering will surface pain points that internal teams may overlook. Operations engineers who maintain existing systems can identify technical limitations and dependencies. Subject matter experts like data scientists and UX designers can provide realistic assessments of proposed solutions. Involving these domain experts validates assumptions and brings real-world perspectives to scope the problem appropriately. Direct engagement with the right staff builds comprehensive understanding to frame the problem statement and requirements.

Evaluating Options

Section titled “Evaluating Options”When evaluating solutions for Google Cloud projects, leveraging the platform’s comprehensive toolset and the team’s domain expertise is key. For example, if improving analytics processing time is the scoped problem, options would include migrating analytics to BigQuery for scalability, using Dataflow for streaming pipelines, and employing AI Platform for predictive modeling. Google engineers can provide guidance on capability, complexity, and costs of each option based on real customer engagements. The cloud support team can detail integration and migration considerations. Together, detailed problem scoping with domain knowledge of Google Cloud capabilities enables data-driven evaluation of solution options on metrics like time, cost, and quality. Evaluations based on Google’s experience and advice sets projects up for successful outcomes within reasonable constraints.

When well-aligned to the problem scope, commercial software can offer a faster and lower-risk alternative to custom development. For common needs like CRM, HR systems, or content management, COTS solutions have pre-built capabilities that can be configured versus built from scratch. This can significantly reduce project timelines and costs. COTS options should be considered when requirements closely match package functionality and limited customization is needed. However, COTS does bring constraints, like rigid workflows or license fees. Integration with other systems may be limited. Vendor dependence risks continuity. Before pursuing COTS, the team should evaluate fit, total cost of ownership, limitations, and vendor viability. Example COTS solutions that may merit consideration for applicable problems include Salesforce CRM, Workday HR, and Adobe Marketing Cloud.

Sometimes the optimal solution is to modify or extend existing applications vs. building new ones. This leverages prior investments and skills while incrementally improving capabilities. When evaluating options, modernization of legacy apps should be considered based on factors like remaining lifespan, technical debt, business value. Modifications may involve re-platforming, re-architecting databases or UIs, integrating new APIs and microservices. Google Cloud provides tools like Cloud Code and Migrate for Anthos to incrementally transform applications.

Greenfield development is advised when existing systems are highly outdated, fragmented, or limiting. Building from scratch enables creating optimal UX, modern tech stack, and cloud-native architecture. While resource-intensive, greenfield development removes legacy constraints and technical debt. It should be considered when no platform exists to meet business needs. Still, integration challenges with remaining legacy systems can add complexity.

Migrating existing apps to the cloud often requires modifications to enable cloud capabilities. Re-architecting for microservices, adding autoscaling, optimizing for serverless, and leveraging managed cloud services typically involves app changes. Google’s Migrate for Anthos can automate and modernize parts of the migration. But modifications are likely required to realize the full benefits of cloud. Assessing migration options should consider app changes needed versus “lift and shift”.

Cost Benefit Analysis

Section titled “Cost Benefit Analysis”Performing cost-benefit analysis is a critical skill for cloud architects to quantify the business case for technology investments. For Google Cloud projects, analyze costs across the full lifecycle including implementation, operations, maintenance, and sun-setting legacy systems. Consider both hard costs like gear, licenses, and engineering time as well as soft costs like training, change management, and risks/liabilities.

Weigh these costs against the expected strategic and tactical benefits for metrics like revenue, customer satisfaction, brand reputation, and competitive advantage. Assign tangible values to intangible benefits where possible. Involve finance teams to model total cost of ownership and return on investment.

For example, migrating analytics to BigQuery could require higher point-in-time costs for data migration, pipeline changes, added headcount, and training. But benefits like improved insights, faster customer intelligence, and developer productivity gains over time could outweigh the near-term expenses.

Likewise, replacing legacy CRM with Salesforce adds licensing costs but can enable sales productivity and pipeline visibility gains that ultimately pay for themselves. Focus beyond simple cost comparisons to fully capture benefits. Leverage Google Cloud Pricing Calculator to estimate usage costs. Consider Cloud Billing discounts like committed use and enterprise agreements to optimize spending. Building credible business cases via thorough cost-benefit analysis is essential for gaining executive buy-in on Google Cloud investments.

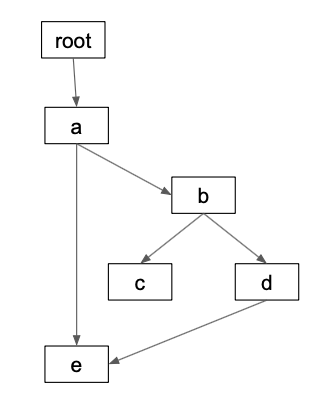

Design

Section titled “Design”The design phase is crucial for architecting scalable, secure, and robust Google Cloud solutions. Design involves translating requirements into technical specifications that serve as blueprints for development teams. Areas of focus include mapping architectures, data models, infrastructure topology, connectivity, integrations, UIs, APIs, security controls, and disaster recovery. Architectural diagrams are core design artifacts. Design decisions consider factors like time-to-market, TCO, extendability, ease of maintenance, and leveraging native Google Cloud building blocks. Well-constructed designs align technology means with business ends.

High-level Design

Section titled “High-level Design”High-level design defines the major architectural components and interactions for a solution. It establishes a conceptual blueprint prior to detailed technical specifications.

Identifying Major Components

Break down the overall system into core functional pieces. For example, an e-commerce platform may include:

Frontend app - Browser/mobile apps for shopping workflows

Backend app - Business logic, integrations, order processing

Databases - Products, customers, orders, transactions, analytics

Storage - Blobs for images, videos, documents

CDN - Cache static content closer to users

Payment gateway - Process credit cards securely

Notifications - Email, SMS, push for order status

Search/Recommendations - Catalog lookups and suggestions

Analytics - Usage statistics, metrics, reporting

Third-party APIs - Shipping, taxes, marketing, fraud detection

Component segregation promotes separation of concerns and modularity.

Defining Component Interfaces

Identify key connections and integrations between components. Specify input/output data formats and protocols.

This is crucial for high-volume transactional exchanges like orders passing between frontend, backend, databases, and payment gateways. Architect for scale during peak loads and traffic spikes like holiday sales.

Latency-sensitive UIs require responsive APIs. Asynchronous flows using message queues and caches help ensure snappy performance even during peaks. Indexed databases speed lookups for search and recommendations.

Component contracts establish clear expectations for interoperability. Strong interfaces decouple subsystems, enhancing maintainability and extensibility. Loose coupling eases onboarding of new technologies like Kubernetes and Knative serverless.

High-level designs focus on major building blocks, interactions, and flows. They help validate fit to requirements before diving into technical minutiae. Align components with Google Cloud services like Compute Engine, App Engine, and Dataflow for execution. Create modular architecture supported by clean interfaces and separation of concerns.

Detailed Design

Section titled “Detailed Design”The detailed design phase fleshes out specifications for each component. This includes:

Data structures - Define database schemas, table relationships, document formats, message payloads, etc. Optimize queries and indexes for performance. For example, denormalize tables for fast reads even if it duplicates some data.

Service accounts - Specify privileges, roles, and access controls. Follow principle of least privilege, e.g. read-only APIs for public data. Use Cloud IAM to manage permissions.

Algorithms - Map out business logic, calculations, data transformations, analytics, machine learning models, etc. Leverage Cloud services like Dataflow and AI Platform.

UIs - Wireframes, page flows, style guides, client-side logic. Ensure mobile-friendly responsive design.

Logging - Structured logs for monitoring and debugging all components. Aggregate with Cloud Logging.

Engaging domain experts who will implement the designs is vital. Their experience surfaces edge cases and opportunities to refine implementations without wasted effort. For example, App Engine developers can recommend splitting front-end and back-end services to isolate scaling and security.

Choosing foundational software like OS, languages, frameworks, and databases affects operations and costs. While open source is free, it requires more effort for patches and upgrades. Managed platforms like Cloud Run reduce admin overhead at an added cost.

For example, running containerized microservices on Cloud Run avoids managing Kubernetes infrastructure yourself. But you lose fine-grained resource controls. There are always tradeoffs to evaluate.

Detailed designs enable building smooth-running, efficient systems. Collaborating with implementation teams ensures designs translate cleanly into production-ready code.

Development, Testing, and Deployment

Section titled “Development, Testing, and Deployment”Development teams build out system components based on the technical designs using coding languages, frameworks, and cloud services. They create executable artifacts like applications, functions, containers, schemas and scripts.

Artifacts are configured for environments like dev, test, staging, and prod. For example, separate Redis caches per environment. Load balancers and autoscaling rules match expected usage patterns.

Static analysis tools like cred scans, dependency checks, and vulnerability scanning are integrated in CI/CD pipelines to identity issues early. Unit and integration testing validate code modules before release.

End-to-end testing across staged environments shakes out bugs before production deployment. Stress/load testing verifies performance holds at peak levels.

Monitoring and logging are implemented for observability. Canary releases rollout changes to a subset of users first.

Deployment automation tools like Terraform and Cloud Build ship artifacts to environments reliably and repeatably. Zero downtime deployments are preferred over risky big bang releases. Rollbacks recover quickly from failures.

Documentation like runbooks, playbooks, and architecture diagrams are created alongside implementation. Immutable infrastructure patterns on containers simplify environment consistency.

In summary, development brings designs to life into hardened, production-ready implementations. Testing and automation help deploy those implementations rapidly, safely, and reliably. Careful configuration, testing, and documentation are essential for smooth cloud operations.

Maintenance

Section titled “Maintenance”Once successfully deployed, maintenance activities sustain ongoing operations of the solution:

Bug Fixes - Issues inevitably arise in production that require diagnosis and rapid patching. Monitoring alerts help surface problems early. Logs and debugging tools facilitate root cause analysis. Bug fixes aim to resolve specific defects without introducing regressions.

Enhancements - New features, capabilities, and integrations are needed over time to improve the product. Enhancements build upon the existing codebase vs. major rewrites. They go through the SDLC starting with scoping needs and designing changes.

Technical Debt Reduction - Shortcuts taken initially like tight coupling, incomplete implementations, or technical shortcuts accrue debt over time. Refactoring to modernize architectures, improve performance, and enhance resilience pays down this debt.

Upgrades - Frameworks, libraries, APIs, and cloud services eventually reach end-of-life and need upgrading. Kubernetes engine rolling upgrades exemplify non-disruptive approach.

Sun-setting - Retiring legacy solutions that have been replaced. Redirecting traffic, exporting data, and dismantling resources.

Ongoing maintenance sustains production health. Establish processes to continuously improve operations, reliability, efficiency and effectiveness. Monitor for performance, availability, and stability trends.

Leverage managed services to reduce maintenance overhead. Implement immutable infrastructure patterns for consistency. Automate testing to prevent regressions.

Evaluate when re-architecture is needed versus incremental improvements. Factor maintenance needs into solution designs and technical choices.

In summary, maintenance keeps solutions aligned with business needs through a culture of incremental, continuous improvement while remaining focused on end-user value.

Continuous Integration & Delivery

Section titled “Continuous Integration & Delivery”Continuous integration and deployment (CI/CD) automates building, testing, and releasing software changes rapidly and reliably handling all we’ve discussed on an automatic basis. CI/CD pipelines improve software quality and accelerate delivery to end users. Architects must design robust CI/CD workflows to unlock agility benefits. Google Cloud provides managed CI/CD services like Cloud Build, Cloud Source Repositories, and Cloud Deploy to simplify implementation.

Business Drivers to Adopt CI/CD

Section titled “Business Drivers to Adopt CI/CD”The first driver for CI/CD adoption is accelerating speed to market. Manual software release processes slow down delivery and cannot keep pace with the rapid rate of change expected by customers today. CI/CD automates testing and deployments enabling teams to safely release changes in hours or minutes versus weeks or months. Rapid iteration speeds new features, bug fixes, and innovations to customers faster.

The second driver is improving software quality. CI/CD bakes in testing from unit to integration to end-to-end levels for every commit. Issues are caught early before impacting users. Automated testing provides consistency across environments. Robust testing reduces risks from defects and outages caused by problematic changes. Higher quality improves customer satisfaction.

The third driver is increasing developer productivity. CI/CD eliminates tedious repetitive tasks like configuring test beds, running regressions, and deploying builds. Developers gain more time for innovation by offloading these roadblocks to automated pipelines. Self-service access enables releasing changes on demand. By systematically catching issues early, CI/CD also massively cuts down on wasteful rework. Developers can deliver more business value faster.

CI/CD’s compelling benefits around accelerating speed to market, improving software quality, and increasing developer productivity explain its widespread enterprise adoption. Businesses recognize CI/CD’s power to meet the rapid pace of change expected by modern customers.

CI/CD Elements

Section titled “CI/CD Elements”Continuous delivery systems are comprised of source control, build automation, testing suites, deployment orchestration, and runtime monitoring capabilities to enable push-button software releases, with core elements including version control repositories, build tools, test runners, container registries, orchestrators like Kubernetes, CI/CD platforms like Jenkins or Spinnaker, infrastructure provisioning through infrastructure-as-code tools, observability dashboards, and more.

When these capabilities for source control, build/test automation, and environment/deploy orchestration are tightly integrated and driven through code, it enables a “GitOps” approach to software delivery. With GitOps, the application source code repository acts as the single source of truth for both developers making changes as well as for the CI/CD tooling that builds, tests, packages and deploys those changes. Infrastructure definitions using infrastructure-as-code are versioned alongside the application code. Deployments and configuration changes are applied automatically on every code change merged to the main branch. Runtime monitoring checks for any drift between code definitions and system state. This tight feedback loop between git repository, automation tooling, and production environments powered by code gives DevOps teams end-to-end visibility and control of the entire software lifecycle.

Version control tools and strategies are instrumental in GitOps design planning, especially in an environment that leverages Google Cloud Platform (GCP). When preparing for the GCP Professional Cloud Architect exam, understanding how GitOps integrates with GCP services like Cloud Build, Cloud Source Repositories, and Kubernetes Engine is crucial. In GitOps, a version control system like Git serves as the ‘single source of truth’ for the declarative state of your infrastructure and applications. By treating infrastructure as code, you facilitate automated, reliable, and fast deployments, which is in line with many of the architectural best practices covered in the exam.

GCP services are built to work seamlessly with version control systems, enhancing the GitOps workflow. For instance, Google Cloud Build can be triggered to automate builds and deployments whenever there is a Git commit. Cloud Source Repositories, a fully-featured, scalable, and private Git repository service by GCP, can serve as your central Git repository, integrating directly with other GCP services. A Cloud Architect should understand how to design systems that incorporate these services for a cohesive GitOps workflow, an area of focus in the certification exam.

In GitOps, monitoring and observability are made simpler because changes are trackable and reversible through Git. Within the GCP ecosystem, monitoring solutions like Cloud Monitoring and Cloud Logging can be integrated into the GitOps pipeline to track performance metrics and logs in real-time. The ability to correlate deployments and changes with system behavior is beneficial for making informed architectural decisions. Therefore, a solid grasp of GitOps, backed by version control strategies, not only helps you implement efficient CI/CD pipelines but also prepares you for scenarios that might appear in the GCP Professional Cloud Architect exam.

Understanding the integration of version control tools and GitOps in a GCP environment is essential for two key reasons. First, it prepares you to build automated, secure, and efficient CI/CD pipelines, a crucial element in cloud architecture. Second, it equips you with knowledge that is directly applicable to topics likely to be covered in the GCP Professional Cloud Architect exam. Both of these benefits make version control and GitOps an indispensable part of your exam preparation and practical application.

Secrets

Section titled “Secrets”Secrets management is a critical component of cloud architecture and a focus area for anyone preparing for the GCP Professional Cloud Architect exam. The ability to securely handle sensitive information like API keys, access tokens, and certificates is crucial for maintaining the integrity and security of applications and services. Google Cloud Secret Manager, a fully managed service on GCP, provides a centralized and secure way to manage, access, and audit secrets. It allows Cloud Architects to set IAM policies, enabling fine-grained control over who can access what secrets, thereby contributing to a more robust security posture. Understanding the nuances of Secret Manager, such as versioning and audit logging, could well be a topic you encounter on the exam.

Apart from Google Cloud Secret Manager, popular vault systems like HashiCorp Vault are also widely used for secrets management. HashiCorp Vault not only provides features for storing secrets securely but also offers functionalities like secret generation, data encryption, and identity-based access. Given that the GCP Professional Cloud Architect exam may include hybrid or multi-cloud scenarios, understanding how HashiCorp Vault integrates with GCP resources is valuable. This can be particularly useful when dealing with workloads that span multiple cloud providers or even on-premises data centers.

One essential best practice to follow, which is likely to be endorsed in the GCP Cloud Architect exam, is the strict avoidance of storing secret values within code repositories. Even with private repositories, the risk associated with exposing sensitive information can lead to significant security vulnerabilities. Tools like git-secrets or pre-commit hooks can be configured to prevent accidental commits of secrets into version control systems. Also, both Google Cloud Secret Manager and HashiCorp Vault can integrate with CI/CD pipelines to provide secrets dynamically, mitigating the need to hardcode sensitive information in codebases.

A robust understanding of secrets management is indispensable for both practical application and preparation for the GCP Professional Cloud Architect exam. You’ll want to be versed in best practices like avoiding the storage of secrets in code repositories and understand the functionalities and limitations of secret management services like Google Cloud Secret Manager and HashiCorp Vault. Mastering these topics not only enhances the security posture of your cloud architecture but also prepares you for scenarios likely to appear in the certification exam.

Deployment

Section titled “Deployment”In the context of analyzing and defining technical processes, mastering the intricacies of Deployment Pipelines in Continuous Deployment is pivotal. A Deployment Pipeline is essentially a series of automated steps that allow software teams to reliably and efficiently release their code to the end-users. It includes building the code, running a suite of tests to detect bugs and vulnerabilities, and finally, deploying the code to production environments. For a Cloud Architect, especially one preparing for the GCP Professional Cloud Architect exam, understanding how to design and implement these pipelines on Google Cloud Platform using services like Cloud Build, Cloud Functions, and Google Kubernetes Engine is essential. These services, when properly configured, can automatically pick up code changes from repositories, build container images, and deploy them to orchestrated container platforms, thus bringing significant agility to the development cycle.

When developing deployment pipelines, certain technical processes are crucial for robustness and scalability. These include blue-green deployments, canary releases, and feature flags, which allow for minimal downtime and low-risk feature rollouts. The GCP Professional Cloud Architect exam often touches on how to architect such processes for scalability, fault-tolerance, and seamless rollbacks. For example, by leveraging Google Kubernetes Engine, you can effortlessly implement blue-green deployments by switching service labels between stable and new release versions. Additionally, Stackdriver, Google Cloud’s integrated monitoring, logging, and diagnostics host, can be woven into the pipeline to provide real-time insights and facilitate quicker decision-making.

Security also plays a vital role in deployment pipelines. Automated security checks, secret management, and compliance audits are part and parcel of the deployment process. Knowing how to integrate tools like Google Cloud Secret Manager for secure handling of API keys or credentials, and setting IAM policies to restrict pipeline access are skills that can set you apart. These considerations are not only imperative for real-world applications but are likely covered under the ‘Analyzing and Defining Technical Processes’ section of the GCP Professional Cloud Architect exam.

Understanding Deployment Pipelines in Continuous Deployment is vital for both your real-world applications and for acing the ‘Analyzing and Defining Technical Processes’ section of the GCP Professional Cloud Architect exam. Being proficient in implementing automated, secure, and scalable deployment processes using Google Cloud Platform’s array of services prepares you for complex architectural questions and scenarios you may encounter in the exam. Therefore, honing these skills is twofold beneficial, offering practical advantages while increasing your likelihood of certification success.

Secrets

Section titled “Secrets”Managing secrets securely is a critical element for anyone preparing for the GCP Professional Cloud Architect exam, especially when it comes to designing and implementing deployment pipelines. Google Cloud Secret Manager offers a centralized and secure way to manage sensitive information like API keys, access tokens, and certificates. Understanding how to leverage Secret Manager to inject secrets into CI/CD pipelines, which could be orchestrated using Google Cloud Build or Kubernetes Engine, is essential. Best practices such as fine-grained access control through IAM policies can ensure that only authorized services or personnel can access these secrets. Learning how to integrate Secret Manager with other GCP services for automated and secure secret retrieval during deployment phases will not only strengthen the pipeline but could also be a focus area in the certification exam. Moreover, knowing how to avoid common pitfalls like storing secrets in code repositories is pivotal for both exam success and real-world application security.

Troubleshooting and Post-mortem Culture

Section titled “Troubleshooting and Post-mortem Culture”Troubleshooting and post-mortem culture are essential aspects of Analyzing and Defining Technical Processes, particularly when aiming to pass the GCP Professional Cloud Architect exam. Mastery in troubleshooting implies not just fixing immediate issues but understanding the architecture well enough to anticipate and prevent future problems. GCP provides robust logging and monitoring tools like Cloud Monitoring and Cloud Logging that can be integrated into the incident response strategy. Knowing how to leverage these tools to identify bottlenecks or vulnerabilities can be an important part of the certification exam.

Post-mortem culture, on the other hand, involves the systematic review of incidents or failures to understand their root causes. Lessons learned from post-mortems often lead to preventive measures that improve system resilience and performance. Google Cloud’s suite of SRE (Site Reliability Engineering) tools can facilitate effective post-mortems by providing key data and insights. A strong grasp of these methodologies not only enhances your operational excellence but is likely to be a topic covered in the GCP Professional Cloud Architect exam.

Incident Post Mortems

Section titled “Incident Post Mortems”An incident refers to an unplanned event that disrupts the normal operation of a system or leads to a suboptimal user experience. Postmortems are the structured analyses performed after resolving the incident to uncover its root causes, learn from the event, and improve future responses. When preparing for the GCP Professional Cloud Architect exam, understanding incident management and the role of postmortems is crucial. These practices directly relate to Analyzing and Defining Technical Processes, a key domain in the certification. GCP offers specialized tools for incident monitoring and logging that can assist in both real-time troubleshooting and post-incident reviews. Mastery of these areas will better equip you for exam scenarios and real-world applications.

Learning from Incidents

Section titled “Learning from Incidents”When preparing for the GCP Professional Cloud Architect exam, a nuanced understanding of how to analyze and learn from both minor and major incidents is crucial. Minor incidents are those that cause limited impact on your system’s availability, performance, or user experience. Although they may seem inconsequential, overlooking them could lead to more significant issues in the long term. The key to managing minor incidents is rapid identification and containment. Tools like Google Cloud Monitoring can help you set up alerts for anomalies that indicate a minor problem, enabling quick action.

Another important aspect of dealing with minor incidents is documentation. While the incidents themselves might be minor, the patterns that emerge could indicate a larger, systemic issue. It’s crucial to log even small disruptions or glitches using a platform like Google Cloud Logging. Over time, this data can provide invaluable insights into the health and efficiency of your infrastructure, which can be crucial not just for the business but also for questions you might encounter on the GCP Professional Cloud Architect exam.

Immediate resolution should be the aim for minor incidents, but the learnings should contribute to preventive measures. After resolving the incident, run a lightweight postmortem to identify the root cause and recommend preventive actions. Though the solutions might be simple, such as code fixes or updates, their role in avoiding future incidents can be significant. Implement these preventive steps as part of a continuous improvement process, as it contributes to the stability and resilience of the system.

Lastly, minor incidents serve as a great training ground for incident response teams. They present an opportunity to improve response strategies and communication protocols without the pressure of a significant system failure. Periodic reviews of minor incidents, and the response strategies employed, can provide a wealth of knowledge to both your team and you as you prepare for the GCP Professional Cloud Architect exam.

On the other hand, major incidents are significant events that cause a noticeable impact on system performance, availability, or security. They demand immediate attention and rapid mobilization of resources. Google’s Site Reliability Engineering (SRE) principles emphasize the importance of immediate, coordinated action to mitigate the issue. When such incidents occur, it’s often necessary to establish an Incident Command System (ICS) to manage the situation efficiently. The ICS is a hierarchical structure that allows for clear command and communication lines, something often emphasized in GCP certification study material.

Post-incident, a thorough postmortem is non-negotiable. Unlike minor incidents, the postmortem for a major incident involves cross-functional teams and often requires intense scrutiny. Google Cloud Platform provides tools that allow for in-depth analysis and data mining, helping to unearth even the most obscured root causes. Each of these steps may be intricately described in your postmortem report, which should be reviewed and acted upon by all stakeholders.

Moreover, major incidents usually prompt a review of the architecture and the incident response plan. This often leads to significant changes aimed at ensuring the incident doesn’t recur. Such reviews and changes can be complex and time-consuming but are vital for the long-term health of your systems.

Additionally, the learnings from major incidents often lead to updates in policies, procedures, and perhaps even company culture. It’s essential to disseminate the learnings across the organization and, if appropriate, to external stakeholders. This is where Google Cloud’s vast array of documentation and information-sharing tools can come in handy.

Understanding how to deal with both minor and major incidents not only strengthens your real-world applications but also prepares you for the sort of complex, scenario-based questions you may encounter in the GCP Professional Cloud Architect exam.

Project Post-Mortems

Section titled “Project Post-Mortems”Analyzing and learning from project work and retrospectives are essential skills for a GCP Professional Cloud Architect. Project work often involves deploying and managing applications and services on Google Cloud Platform, and each project provides a unique learning experience. Utilizing built-in GCP features like Cloud Monitoring, Cloud Logging, and Data Studio can help you measure the success of deployments, infrastructure scaling, and other critical metrics. These tools not only provide real-time data but also offer historical views that can help identify trends, bottlenecks, or areas for improvement. Learning to interpret this data is crucial for both improving ongoing projects and for the analytical questions that might appear on the GCP certification exam.

Retrospectives, commonly employed in Agile frameworks, offer another rich avenue for learning. These scheduled reviews allow teams to discuss what went well, where they faced challenges, and how they can improve in the future. In the context of Google Cloud Platform projects, retrospectives can focus on optimizing resource utilization, improving security protocols through services like Identity and Access Management (IAM), or enhancing automation and CI/CD pipelines with tools like Cloud Build. Retrospectives should result in actionable items, with corresponding changes tracked over time for efficacy. This iterative process of feedback and improvement is fundamental in any cloud architect’s skill set and is highly likely to be a topic of interest in the GCP Professional Cloud Architect exam.

The practice of consistently analyzing project work and conducting retrospectives provides multiple benefits. First, it cultivates a culture of continuous improvement, essential for maintaining efficient, secure, and reliable cloud architecture. Second, the insights and lessons learned directly feed into better design and decision-making for future projects. Third, it prepares you for the GCP Professional Cloud Architect exam by ingraining best practices and a systematic approach to problem-solving. As the certification exam includes scenario-based questions that assess your ability to analyze and define technical processes, being adept at learning from project work and retrospectives is invaluable.

Enterprise IT Processes

Section titled “Enterprise IT Processes”Enterprise IT Processes form a cornerstone in the preparation for the GCP Professional Cloud Architect exam, particularly when it comes to Analyzing and Defining Technical Processes. Understanding the ITIL (Information Technology Infrastructure Library) model is vital, as it provides a standardized approach to IT service management. ITIL organizes its framework around four dimensions: Organizations and People, Information and Technology, Partners and Suppliers, and Value Streams and Processes. These dimensions help create a balanced focus across the enterprise, ensuring that technology services align with business goals.

ITIL management practices are categorized into three groups: General Management Practices, Service Management Practices, and Technical Management Practices. These categories collectively aim to provide a comprehensive guide to planning, implementing, and optimizing IT services, making ITIL a valuable framework for cloud architects to understand. This knowledge can be especially beneficial when answering scenario-based questions on the GCP Professional Cloud Architect exam that require a deep understanding of how to analyze and define complex technical processes within an organization.

Business Continuity

Section titled “Business Continuity”Business continuity and disaster recovery are not merely technical or operational concerns; they profoundly impact an organization’s most important asset—its people. Imagine a scenario where a critical internal service, such as an HR portal or a data analytics dashboard, experiences a catastrophic failure. It’s not just about data loss or a dip in sales metrics; it’s about the immediate disruption it causes in the day-to-day lives of employees who rely on these services to do their jobs efficiently. For a sales team, a CRM outage means an inability to track customer interactions or follow leads, directly impacting revenue. For HR, a system failure could affect everything from payroll processing to employee onboarding, leading to delays, confusion, and frustration. The ripple effects of such a breakdown can severely compromise employee morale and productivity, which, in turn, affect customer satisfaction and the bottom line.

To mitigate these risks, the first step in business continuity planning is conducting a Business Impact Analysis (BIA). This involves identifying the most crucial business functions and the resources needed to support them. A thorough BIA will evaluate the financial and operational impact of system unavailability, helping to prioritize recovery strategies. Employee dependencies on specific services should also be assessed, as their productivity is directly tied to the availability of these services.

The next critical component is formulating a disaster recovery plan, which should outline the steps needed to restore essential functions. This plan should detail the resources, personnel, and technologies required to recover from various types of disasters such as cyber-attacks, natural calamities, or infrastructure failures. Staff should be trained and well-versed in implementing the plan, and regular drills should be conducted to test its effectiveness.

- Disaster Plan: A guide outlining the specific actions to be taken in the event of various types of disruptions.

- Impact Analysis: An assessment identifying critical business functions and quantifying the impact of their unavailability.

- Recovery Plans: Detailed strategies for restoring essential business functions.

- Recovery Time Objectives: Timeframes within which systems, applications, or functions must be recovered after an outage.

Another crucial aspect of business continuity is setting Recovery Time Objectives (RTOs), which specify the maximum allowable downtime for various business processes. Achieving the defined RTOs requires implementing appropriate technology solutions, from redundant systems to automatic failover capabilities. These technologies must be tested rigorously to ensure they meet the needs outlined in the business impact analysis and disaster recovery plans.

In summary, business continuity planning is a multifaceted exercise that goes beyond mere technology fail-safes. It encompasses a deep understanding of business processes, a thorough analysis of various impact scenarios, comprehensive recovery strategies, and clear time objectives for restoring functionality. And at the heart of it all are the employees, whose productivity and well-being are directly influenced by the resilience and reliability of the systems they use daily. Therefore, every effort must be made to ensure that the business continuity and disaster recovery plans are robust, comprehensive, and regularly updated to adapt to evolving challenges.

Disaster Recovery

Section titled “Disaster Recovery”Disaster recovery (DR) planning is an integral component of a GCP Professional Cloud Architect’s role, especially when it comes to safeguarding an organization’s data and applications hosted on Google Cloud Platform. The GCP certification exam tests candidates on their capability to architect robust disaster recovery solutions, making it a critical subject of focus. Architecting a DR strategy on GCP involves choosing the right combination of services such as Cloud Storage, Persistent Disk snapshots, and other backup solutions, as well as planning for multi-regional deployments to ensure data availability even when an entire region faces issues. Mastery of these services and their proper implementation is vital for both exam preparation and real-world responsibilities.

One of the key aspects of DR planning on GCP involves designing for redundancy and high availability. GCP’s various data storage options, like Cloud SQL, Bigtable, and Datastore, offer built-in replication and failover capabilities. Understanding the nuances of these features, such as replication types and eventual or strong consistency models, will not only aid in successful disaster recovery but also in answering nuanced questions that may appear in the certification exam. Knowing when to use a multi-regional storage class versus a regional or nearline storage class can significantly impact an organization’s ability to recover quickly from a failure.

Creating and executing DR plans in GCP also involves automating backup processes and orchestrating recovery workflows. For this, Google Cloud offers specialized services like Cloud Scheduler for cron job automation and Cloud Composer for workflow orchestration. A GCP Cloud Architect needs to design these automated processes in a manner that minimizes the Recovery Time Objective (RTO) and Recovery Point Objective (RPO). Knowing how to configure, trigger, and monitor these services is often scrutinized in the GCP Cloud Architect exam, as it directly relates to one’s capability to create an effective DR plan.

Furthermore, the role of a GCP Cloud Architect extends to performing regular tests of the DR plans, including failover and failback exercises. This ensures that all team members understand their roles in the event of a disaster and that the plan itself remains effective as system configurations evolve. Google Cloud Platform provides robust logging and monitoring solutions, such as Cloud Monitoring and Cloud Logging, which enable architects to keep an eye on system health and performance metrics continuously. Familiarity with these tools is essential, as they help validate the DR strategy’s effectiveness and can offer insights for ongoing optimization.

Security also plays a pivotal role in disaster recovery planning. GCP’s robust Identity and Access Management (IAM) allows architects to define roles and permissions explicitly, thereby ensuring only authorized personnel can execute different parts of the DR plan. This layer of security is crucial in the larger schema of DR planning, ensuring that the recovery process itself doesn’t become a vector for security vulnerabilities. The understanding of IAM in a disaster recovery context is another area that the GCP Professional Cloud Architect exam could potentially explore.

In summary, a GCP Professional Cloud Architect has an expansive role in disaster recovery planning, from architecture and redundancy to automation, security, and ongoing testing. Expertise in these areas is not just crucial for executing this role effectively but also for succeeding in the GCP Cloud Architect certification exam. Therefore, it’s imperative to grasp the breadth of services and features offered by Google Cloud Platform that facilitate robust disaster recovery plans. Each component, from storage and data replication to automation and security, is a critical puzzle piece in architecting resilient systems capable of withstanding and recovering from unexpected adverse events.

Summary

Section titled “Summary”Software solutions require careful analysis, planning, development, testing, and ongoing maintenance. The software development lifecycle provides a structured approach to manage this process. It starts with gathering requirements by evaluating the problems to be solved, assessing potential solutions, analyzing needs, clarifying constraints, and defining the overall scope. The next phase focuses on solution design, including mapping system architecture, data models, infrastructure, integrations, interfaces, security controls, and disaster recovery. Detailed technical specifications are created to provide blueprints for development teams.

Development teams then build out the designed components using coding languages, frameworks, and cloud services. The resulting executable artifacts are configured for dev, test, staging and production environments. Testing validates code modules before release through practices like unit testing, integration testing, and end-to-end testing. Monitoring, logging and canary releases further harden releases before full production deployment. Automation tools assist with deployment, enabling frequent updates with minimal downtime and quick rollback when issues arise. Alongside implementation, documentation like runbooks and architecture diagrams are created.

Once in production, maintenance activities sustain operations. Bug fixes resolve issues without introducing regressions. Enhancements incrementally improve capabilities over time. Technical debt is paid down through refactoring and modernization. Components are upgraded before reaching end-of-life. Legacy solutions are retired after traffic redirection and data migration. Ongoing maintenance aligns solutions with evolving business needs through continuous incremental improvement.

Continuous integration and deployment (CI/CD) automates these processes through pipelines integrating version control, build automation, testing, and release orchestration. CI/CD accelerates speed to market, improves software quality through robust testing, and increases developer productivity by eliminating manual tasks. Core CI/CD components include source control repositories, build tools, test runners, container registries, orchestrators, infrastructure provisioning, observability dashboards, and deployment automation.

Troubleshooting involves not just fixing immediate issues but anticipating and preventing future problems through monitoring, logging, and post-incident analysis. Post-mortems foster improvement by systematically reviewing major incidents to understand root causes and prevent recurrence. Retrospectives similarly help teams learn from project experiences to optimize future work. These practices contribute to a culture of continuous improvement rooted in data-driven insights.

Exam Essentials

Section titled “Exam Essentials”Software development lifecycles provide structured processes for delivering solutions. Know key phases like requirements analysis, solution design, development, testing, deployment, documentation, and maintenance. Analysis should align solutions with business needs through problem scoping, solution option evaluation, and cost-benefit analysis. High-level designs define major components and interactions. Detailed designs specify data structures, algorithms, interfaces, infrastructure, security controls, and integrations. Development teams build designed components using coding, frameworks, and cloud services. Testing validates code before release through practices like unit, integration, end-to-end, load, and canary testing. Deployment automation enables rapid, reliable delivery with minimal downtime. Maintenance sustains operations through bug fixes, enhancements, debt reduction, upgrades and retirement of legacy systems.

Continuous integration and deployment (CI/CD) automates testing and releases through pipelines integrating version control, build tools, test runners, registries, orchestrators, provisioning tools and monitoring. Know how source control enables GitOps workflows in GCP through integration with Cloud Build and Cloud Source Repositories. Secrets management securely injects credentials into pipelines using tools like Secret Manager and Vault. Deployment best practices include blue/green, canary releases, and feature flags. Monitoring and logging facilitate troubleshooting and post-mortems.

Troubleshooting involves not just fixing immediate issues but anticipating and preventing future problems through monitoring, logging, and post-incident analysis. Post-mortems foster improvement by systematically reviewing major incidents to understand root causes and prevent recurrence. Retrospectives help teams learn from project experiences to optimize future work. These practices contribute to a culture of continuous improvement rooted in data-driven insights.

For business continuity planning, know the purpose of business impact analysis, disaster recovery plans, and recovery time objectives. Recovery strategies should focus on restoring prioritized business functions within target timeframes. Solutions encompass redundancy, backups, multi-region deployments, and failover automation. Regular testing validates effectiveness.

Disaster recovery on GCP leverages built-in data replication, automated backup processes, workflow orchestration, and multi-regional data availability. Recovery time and recovery point objectives guide design. Failover and failback testing ensures plan readiness. Identity and access management secures access. Monitoring tools validate design and uncover optimization opportunities.

Know ITIL service management framework, including the four dimensions: Organizations/People, Information/Technology, Partners/Suppliers, Value Streams/Processes. ITIL practices fall into three groups: General Management, Service Management, and Technical Management. ITIL provides standards for planning, delivering, and improving IT services across the enterprise.

In summary, focus on understanding end-to-end software delivery processes, CI/CD pipelines, troubleshooting methodologies, business continuity planning, disaster recovery design, and ITIL for service management. Know how to leverage GCP tools and best practices across these areas. Mastering technical processes demonstrates ability to analyze and define solutions aligned with business goals.